AtomVM 2025 Year in Review

The last time I wrote a real AtomVM post (not counting quick updates) was 2018. Yep. Time flies when you end up developing a project as crazy as AtomVM. If you want a refresher on what AtomVM is and why it exists, here’s that old post.

One of my 2026 resolutions is “write more.” Hold me accountable.

I’m not going to try to summarize eight years of history in one shot, so this post is just a 2025 recap. A follow-up post will cover where AtomVM is going next.

But let me say one thing before starting: 2025 has been amazing.

Such an amazing year wouldn’t have been possible without amazing folks. AtomVM hasn’t been a solo project for a long time now. A lot of what you’ll read below happened because a bunch of smart people showed up, cared, argued about trade-offs and hacked. I’m grateful for that. I’m also grateful to Dashbit and Software Mansion for their contributions.

Alright. Let’s do the highlights, roughly in merge order.

Distributed Erlang

If you’ve used Erlang/OTP in production, you know the vibe: you don’t just build one node, you build a system. You spin up multiple nodes, connect them, and suddenly “send a message to that PID over there” feels as normal as sending one locally.

In Erlang terms, this is “distribution”: nodes speak the Erlang distribution protocol, and once connected you get familiar building blocks like message passing, monitoring and RPC across nodes.

Now AtomVM can join that party. An AtomVM node can either connect to a regular BEAM node, or to another AtomVM node.

Under the hood, AtomVM implements the distribution protocol (in a pragmatic, “start with the useful stuff” way), including epmd support (and even a pure Erlang epmd implementation you can run on embedded targets).

Why this is interesting on microcontrollers

On servers, distribution is about clustering, redundancy and scaling. On embedded, it unlocks weirder (and more fun) architecture patterns:

Multiple brains on the same board: imagine a PCB with multiple MCUs, one dedicated to IO, another to connectivity, another to UI. Distribution gives you a clean concurrency/message model across chips instead of inventing your own RPC protocol every time.

“Smart peripheral” mode: one AtomVM node can act like an IO expander with opinions (debouncing, buffering, safety checks), and the rest of your system talks to it via messages.

Low-power domains: keep AtomVM running in an always-on low-power domain doing lightweight coordination, while the “big” part of the system sleeps until it’s needed.

Fault-tolerant systems: you can build fault-tolerant, low-power systems. This paper shares some interesting ideas: https://dl.acm.org/doi/epdf/10.1145/3759161.3763048

Debugging and control from a real BEAM node: even if your product is embedded, it’s incredibly convenient to poke it from an OTP shell, run RPC calls, or build test harnesses that treat the device like “just another node”.

And here’s the part I really like: AtomVM supports the same concept of alternative carriers that Erlang/OTP offers through distribution modules. Today distribution runs over TCP/IP, but the model doesn’t have to stop there. If you’re the kind of person who thinks “why not Erlang distribution over SPI or I²C?”, you should definitely give AtomVM (and its distribution features) a try.

Is it complete? Not yet: there are limitations (e.g., cookie auth is there, TLS distribution isn’t). But it’s real, it works, and I can’t wait to see the strange things people build with it.

You can read more about Distributed Erlang here: https://doc.atomvm.org/main/distributed-erlang.html

Popcorn (Elixir in the browser… powered by AtomVM)

Popcorn was one of those new things that made me smile because it’s both obviously cool and unexpected.

Popcorn lets you run client-side Elixir in the browser, with JavaScript interoperability. Under the hood, it runs AtomVM compiled to WebAssembly, and wraps it with the tooling and APIs needed to build real front-end experiences.

To be super clear: Popcorn isn’t AtomVM. It’s a separate project built on top of AtomVM. But getting AtomVM to a place where Popcorn could run “serious” Elixir workloads required a lot of work across the VM and its libraries.

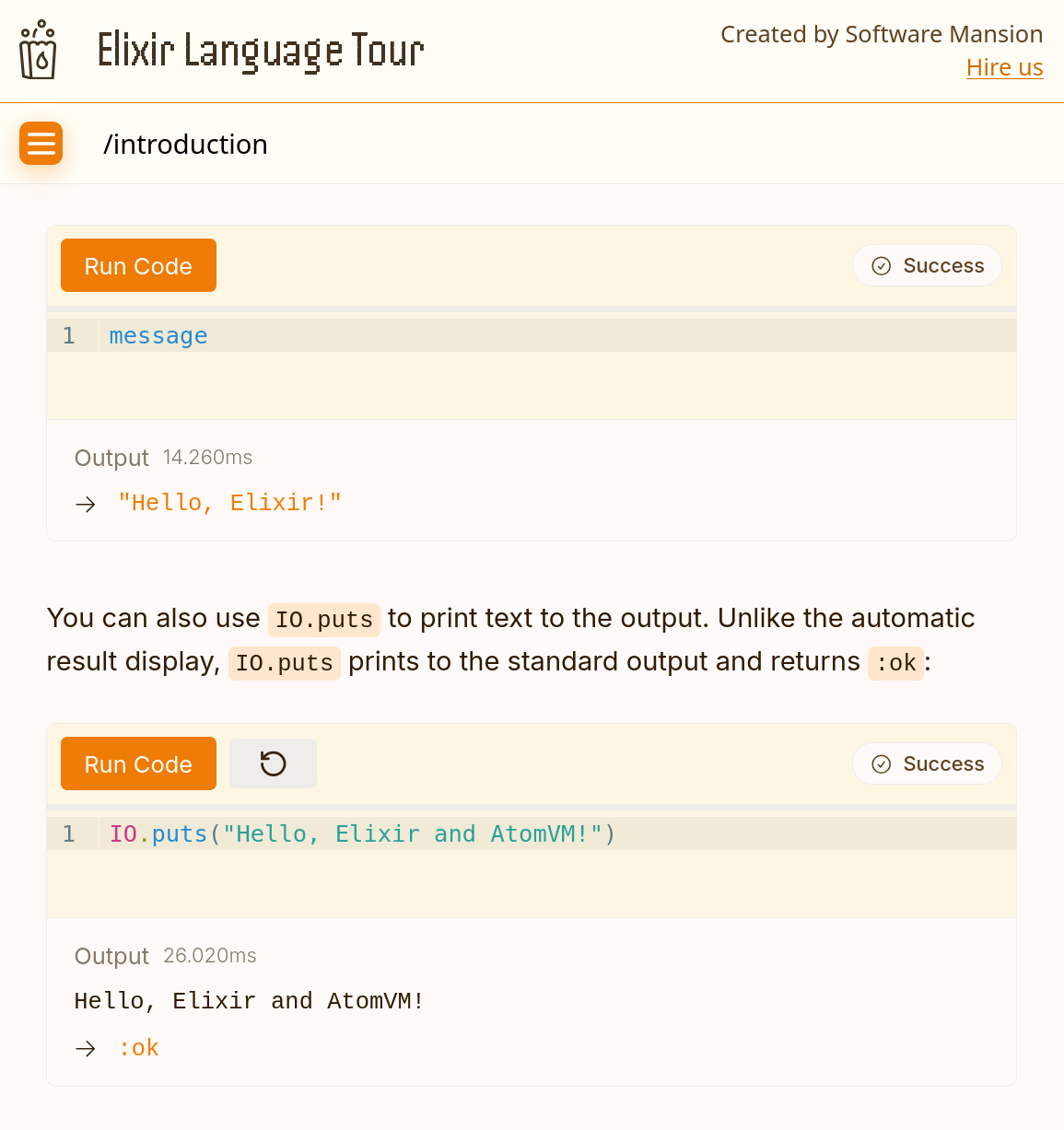

Speaking of simple but effective applications, the Elixir Language Tour is one of them: you open a web page and you can start learning Elixir immediately, no local install required, just type and run. That’s a big deal for learning and onboarding.

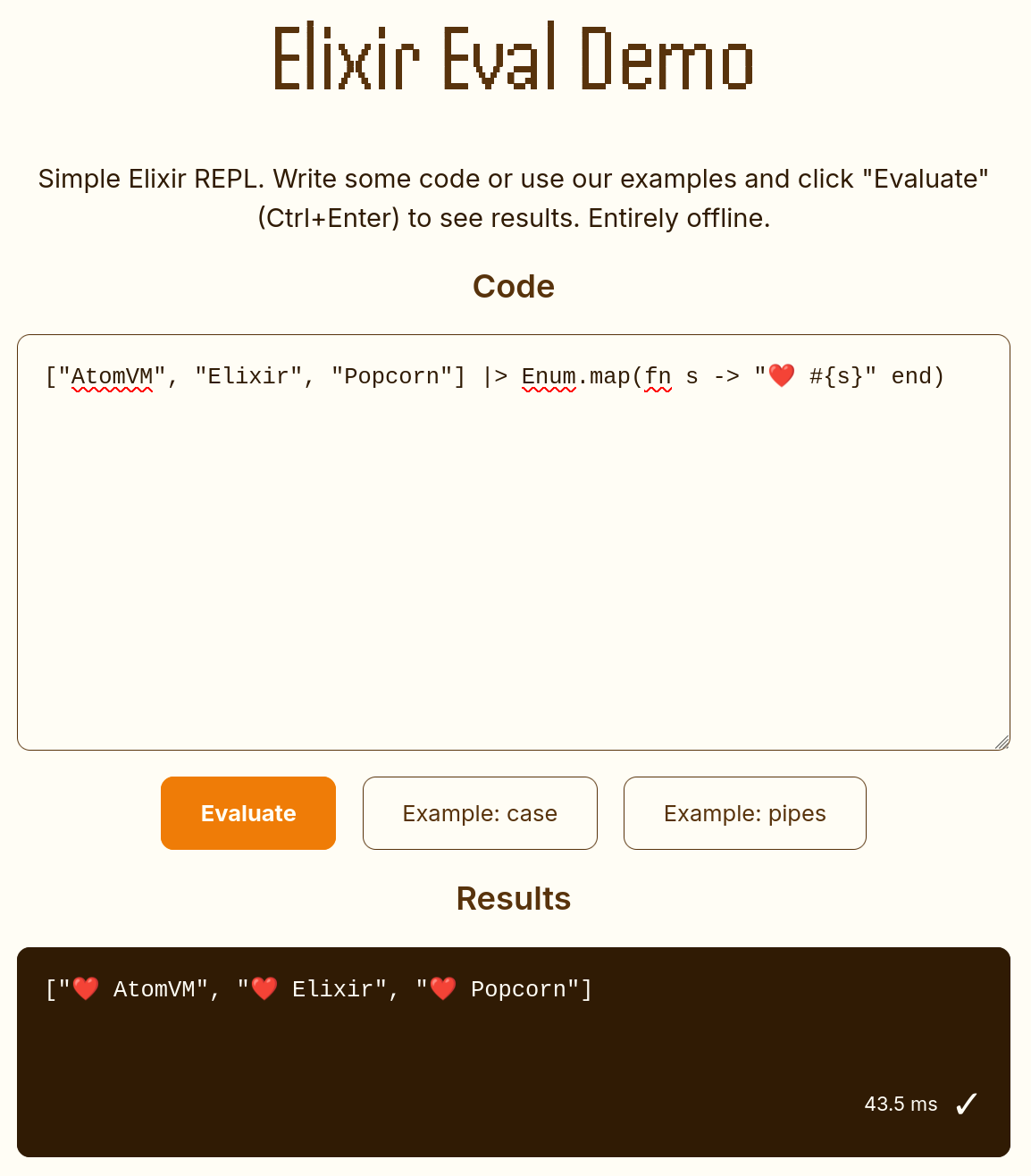

Another amazing demo of this technology is the REPL running in the browser, which is also an interesting benchmark of the kind of complex applications AtomVM is now able to run.

You can read more about Popcorn here: https://popcorn.swmansion.com/

Just-in-Time and Ahead-of-Time

AtomVM’s bread and butter has always been: run BEAM bytecode on constrained devices. That’s still true.

But bytecode interpretation has an obvious ceiling: if you need more speed, you eventually want native code.

In 2025, AtomVM gained full JIT/AoT machinery with a very interesting twist: the JIT compiler is written in Erlang. The code server compiles bytecode to native code and then resumes the trapped process.

So now AtomVM supports not one, but four execution modes:

Emulated (default): interpret BEAM bytecode (small and portable). This is what AtomVM has been doing since 2017.

JIT: run only native code, compiled at runtime (requires more memory).

Native (AoT): precompile on your desktop/CI, ship native code to the target (no JIT compiler needed on the device).

Hybrid: run native code when available, fall back to emulation for the rest.

This is still “new and sharp” but it opens a path where AtomVM can keep its tiny-footprint story and offer an escape hatch when you need serious performance.

If you want to give it a try, you can do so on devices with a supported architecture, such as riscv32 (ESP32-P4, ESP32-C family, etc.), armv6m (Raspberry Pi Pico) or your desktop (both x86_64 and aarch64).

You can read more about JIT here: https://doc.atomvm.org/main/atomvm-internals.html#jit-and-native-code-execution

Big Integers

At some point in the past, I said big integers were out of scope for AtomVM.

That actually didn’t age well.

The reason is simple: 64-bit signed integers are fine… until they aren’t. The moment you talk to the outside world (for example, when parsing a protobuf message produced by a C application that uses uint64), you may run into values that don’t fit in int64.

So AtomVM now supports big integers up to 256 bits (a sign bit + 256-bit magnitude), including in arithmetic, bitwise and encode/decode functions.

In practice, that means you can represent integers in the -(2²⁵⁶ - 1)..(2²⁵⁶ - 1) range.

For scale: estimates for the number of atoms in the observable universe are often around 10⁸⁰, while 2²⁵⁶ is roughly 10⁷⁷. So no, we still can’t assign a unique integer ID to every atom in the universe… but we can at least label every atom in our galaxy, and in the Local Group as well, and still have plenty of integer IDs left over.

So let’s say that about 2²⁵⁷ integers ought to be enough for anybody, and let’s hope this will age better.
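If you want to double-check the arithmetic above, here is a small sketch. Python is used purely as an arbitrary-precision calculator; this is not AtomVM code, and the helper names (`fits_in_int64`, `fits_in_bigint`) are made up for illustration. The only inputs taken from this post are the 256-bit magnitude and the 10⁸⁰ estimate.

```python
# Back-of-the-envelope check of the big integer numbers in this post.
# Not AtomVM code: Python is only used as an arbitrary-precision calculator.

MAGNITUDE_BITS = 256                  # from the post: sign bit + 256-bit magnitude
MAX_BIGINT = 2**MAGNITUDE_BITS - 1    # largest representable value
MIN_BIGINT = -MAX_BIGINT              # sign-magnitude, so the range is symmetric

INT64_MIN = -(2**63)
INT64_MAX = 2**63 - 1
UINT64_MAX = 2**64 - 1                # what a C uint64 field can carry

ATOMS_IN_UNIVERSE = 10**80            # the rough estimate quoted in the post


def fits_in_int64(value: int) -> bool:
    """Hypothetical helper: would this value fit in a signed 64-bit integer?"""
    return INT64_MIN <= value <= INT64_MAX


def fits_in_bigint(value: int) -> bool:
    """Hypothetical helper: is this value inside the 256-bit range above?"""
    return MIN_BIGINT <= value <= MAX_BIGINT


# Number of distinct integers in MIN_BIGINT..MAX_BIGINT (zero counted once).
DISTINCT_VALUES = MAX_BIGINT - MIN_BIGINT + 1

if __name__ == "__main__":
    print("uint64 max fits in int64:  ", fits_in_int64(UINT64_MAX))
    print("uint64 max fits in bigint: ", fits_in_bigint(UINT64_MAX))
    print("2**256 fits in bigint:     ", fits_in_bigint(2**256))
    print("decimal digits of max:     ", len(str(MAX_BIGINT)))
    print("distinct values == 2**257-1:", DISTINCT_VALUES == 2**257 - 1)
    print("enough IDs for every atom: ", DISTINCT_VALUES >= ATOMS_IN_UNIVERSE)
```

Strictly speaking the range holds 2²⁵⁷ - 1 distinct values, since zero is only counted once, which is close enough to 2²⁵⁷ for the joke to stand.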

You can read more about big integers here: https://doc.atomvm.org/main/differences-with-beam.html#integer-precision-and-overflow

The “boring” stuff (that made everything else possible)

A lot of 2025 was also unglamorous but essential: filling in missing OTP/library pieces, improving ETS behavior, tightening compatibility, making the emscripten port happier, etc. In particular, AtomVM added OTP-28 support in the main branch, and there’s a long tail of other small additions that were needed for things like Popcorn and running the Elixir compiler.

If you enjoy scrolling changelogs (no judgment), here is the rabbit hole.

Is AtomVM ready for production?

Mostly yes (depending on what you mean by “production”).

AtomVM is still v0.x, which means APIs can move (that’s basically the meaning of v0 in semantic versioning jargon, after all). Also, not every OTP feature is implemented yet (and some of them never will be, for some very good reasons).

That said, AtomVM isn’t a prototype anymore: I’m aware of projects using it in production (or being built with that goal). There is also a stable v0.6.x series, which is the place to be if you want something conservative. The latest stable release is v0.6.6, which has been through several rounds of bugfixes (sadly, it does not include OTP-28 support, which is main-branch only).

In other words, we are already following the good practice of keeping a maintenance branch, which is good for any long-term project.

If you want the shiny stuff from this post (OTP-28 support, big ints, lots of new library bits, etc.), that’s in main, which comes with the usual “development branch” caveats.

Tooling-wise: we can definitely do better (we always can), but it’s already good enough to build real applications without losing your mind.

Speaking of Elixir tooling: if you haven’t flashed an ESP32 device yet, you can download a released version and flash it without even installing esp-idf (we use pythonx under the hood; thankfully, 2025 has been the year of interoperability).

By the way, if you want to share any story of AtomVM in production, let me know.

What’s next in 2026?

That’s the next post.

I’ll talk about where AtomVM is heading, what I’m excited about and more. For now, if you’re building something with AtomVM, if you have a delightfully cursed idea for distributed Erlang over a non-IP transport, or you want to share any thoughts, I’d genuinely love to hear about it.

Meanwhile, if you’re attending FOSDEM 2026, I’ll be giving a talk about AtomVM.

Support my work on AtomVM: sponsor me on GitHub ❤️